Washington University in St. Louis, Teaching Assistant, Spring 2021-Fall 2024

Since 2020, I have been a TA and tutor at Washington University in St. Louis. A breakdown of my positions, starting with the most recent, is shown below.

- TA, CSE 560M: Computer Systems Architecture I (Fall 2024)

- Ineligible to TA while in PhD Program due to MTE (Fall 2023 - Summer 2024)

- TA, CSE 347: Analysis of Algorithms (Fall 2022 - Spring 2023)

- Course Admin, CSE 537T: Trustworthy Autonomy (Winter 2023)

- TA, CSE 231S: Parallel and Concurrent Programming (Spring 2023)

- Section Lead, CSE 247: Data Structures and Algorithms (Fall 2022)

- TA, CSE 247: Data Structures and Algorithms (Spring 2021 - Spring 2022)

- Tutor, CSE 131: Introduction to Computer Science (Fall 2020 - Spring 2021)

Zillow, Software Engineer Intern - Mobile, Summer 2023

A video demo of the "Learn More" banner

Over the summer between graduating and starting my PhD program, I interned at Zillow on the Rich Media Experience Mobile team. While at Zillow, I quickly learned the Swift programming language and the accompanying SwiftUI framework for iOS development. I worked closely with a mentor to finish the specifications for a new feature in the "Showcase" listings of Zillow (paid premium listings with HD photos, 3D tours, etc.). The feature was a new banner below the listing's photo that read "Learn More about Showcase Listings" and opened a modal with more information about the benefits of using Showcase listings on Zillow. I had the basic modal and banner working within the first month while learning Swift and SwiftUI, and I spent the following month, July, adding dynamic data.

In particular, the official Zillow app had no existing way to deliver static photos that weren't from home listings (such as the Zillow logo) over the internet instead of bundling the images locally. The photos for my feature were large enough that bundling them would have increased the app size by about 50 megabytes. To keep the app size down, I met with the development team of the StreetEasy app (a Zillow-owned app), who had a digital asset management (DAM) service for hosting static photos, and got permission to store the photos for my feature in the DAM and let the Zillow app download them instead. After I added analytics hooks to track activity, the feature launched in private beta in early August and was publicly released to users in late August.

I also fixed several bugs in the official Zillow app and participated in Zillow's HackWeek, where teams have one week to rapidly prototype a new feature. I worked on a team of 15 engineers to build TranZlate, a tool that uses a Large Language Model to translate real estate listings into the user's native language. The project won two first-place awards at the competition: 1st place in the Social Impact category and the 1st place People's Choice Award for the "Create Confidence for Big Decisions" category.

Garmin, Software/Biosensor Team Intern, Summer 2022

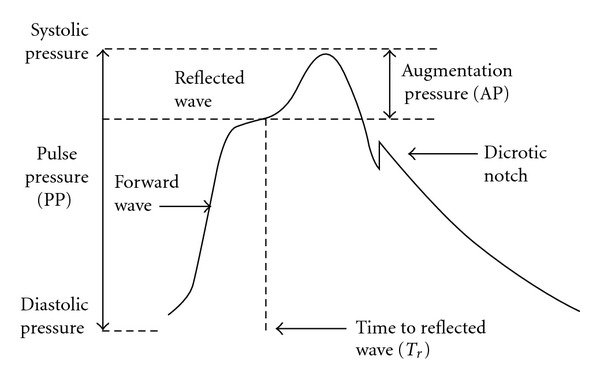

A diagram of a traditional pressure waveform measured by a blood pressure cuff

I interned at Garmin as a member of the Biosensor team at their main Olathe campus. The team was formed as part of a new subdivision called Garmin Health. As an intern, I worked on research and simulator development. Most of my work was research, but I also helped write a few patches in C/C++ for the hardware simulators. My research was on optical detection of blood pressure using Garmin's smartwatches. Garmin's current smartwatch sensors perform photoplethysmography (PPG) on the wrist using 2-4 green LEDs emitting at 550 nm. They can also use 2-4 infrared LEDs, which reach deeper but draw extra power.

The optical signal's shape is equivalent to a pressure waveform from a blood pressure cuff (the accompanying diagram shows this blood pressure waveform), but the optical measurement presents reliability issues. While the PPG signal has the same shape, the values of the signal have very little correlation with the actual blood pressure and vary wildly from subject to subject (and even from trial to trial). As a result, I prototyped additional estimation steps and alternative features to account for this.

With the help of my mentor, I wrote instructions for a trial that Garmin's sister team in Taiwan could run, returning data quickly for me to build a model with. My pipeline went through three distinct iterations, each based on a different signal-processing approach. The final pipeline achieved moderate statistical correlation and modest accuracy. Despite this somewhat successful correlation, we ultimately determined that optical estimation was not feasible with Garmin's existing sensor arrays, but that the approach shows promise. The conclusion was that the next-generation sensor array would need to be improved and that Garmin would include hardware similar to LiveMetric's recently FDA 510(k)-cleared sensor array.

It is worth noting that competitors such as Apple, Samsung, and Withings have had difficulty with similar projects. Only Samsung has had mild success, releasing blood pressure detection in Asian markets but not in the North American or European markets, where medical device regulations tend to be stricter.

Washington University School of Medicine, Undergraduate Researcher, Summer 2021

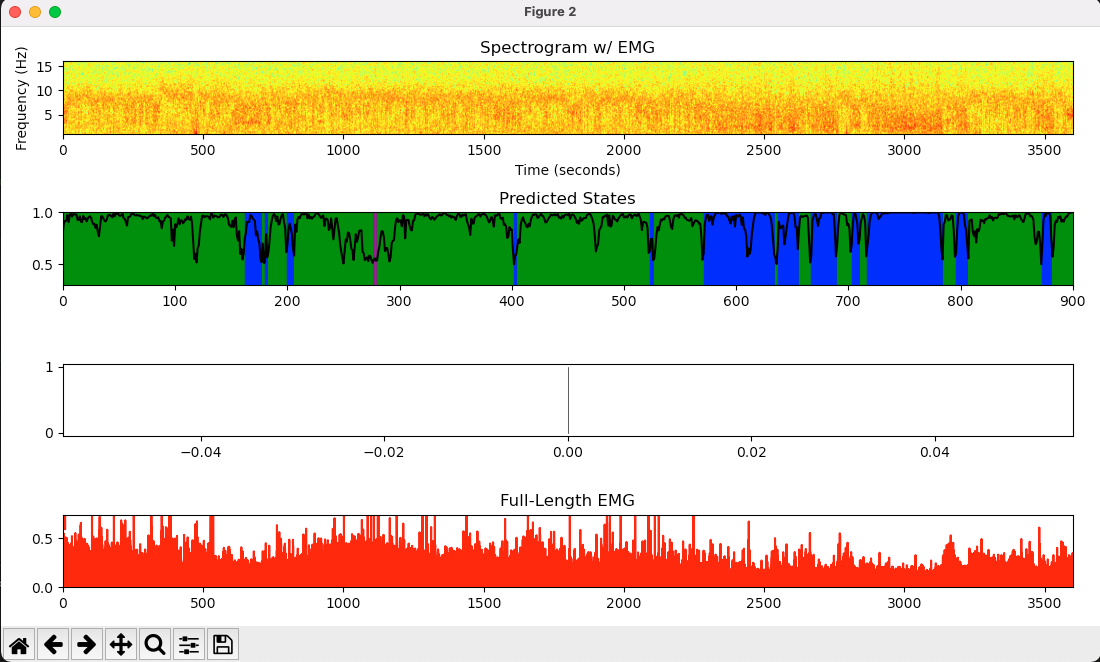

Photo of the interface scoring a sleep experiment

I was a researcher at the Chen Lab at the Washington University School of Medicine, which studies sleep in lab mice. As an undergraduate researcher, I developed and maintained a tool to analyze mouse sleep and classify it into different states (REM, NREM, etc.). I added features and modules to a machine learning pipeline written by a graduate student. New features included a system for versioning trained models to track overtraining, the ability for the model to identify a new state called "quiet wakefulness" to better classify intermediate states of wakefulness, and the ability to click on a spectrogram and see detailed LFP, EMG, Delta, and Theta signals to closely analyze problem areas the model couldn't identify. In addition to maintaining these features, I became an active member of the lab and attended biweekly journal clubs, where I learned about and discussed academic papers on various neuromodulation topics.

The lab has continued to maintain the project since I stopped working there, and a link to the GitHub repository can be found here.

Department of Defense: Project Tesseract, Intern, Summer 2020

Project Tesseract is an effort by the United States Department of Defense to gain insights on emerging fields from fellows recruited from academia, and to have those fellows work on projects related to research on emerging technologies.

As an unpaid fellow, I prototyped a vending system for tools to speed up maintenance operations on a military base's flight line. I also worked on a sub-team developing a system that allows autonomous robots to operate inside a hangar among humans, similar to those in Amazon fulfillment centers. I presented a report on the robot system to Brigadier General Linda Hurry, who received it positively.

OsteoPOP, Engineering Lead, Fall 2019-Spring 2020

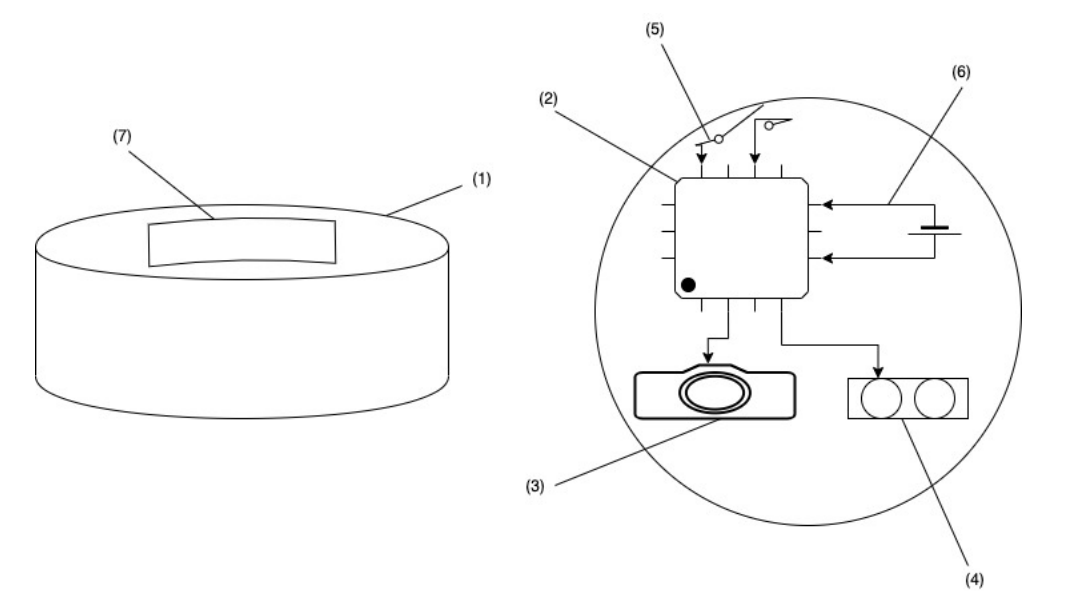

Diagram from the provisional patent

OsteoPOP was a student startup led by Biomedical Engineering Ph.D. student Ian Berke. OsteoPOP focused on finding a solution to the low compliance rate for bisphosphonate medications prescribed to elderly women for the treatment of osteoporosis.

Our team spanned a wide array of disciplines, including students skilled in Biomedical Engineering like Ian and me, an Electrical and Systems Engineering student proficient in sensors, two Economics Ph.D. students studying incentives, and MBA students studying the market for a potential solution.

Our solution was a device with an optional companion application that tracked compliance, educated the user on the importance of their medication, and automatically requested a prescription refill for them if they consented to it. As an Engineering Lead, I managed the development schedule while still working as an engineer on the prototype, which included microcontroller programming, Computer-Aided Design (CAD), and app development. Our final result was simple yet effective: a pill cap equipped with a LIDAR sensor and 3D dot projection that could estimate the number of pills left in the bottle accurately enough to determine whether a medication had been taken and whether a refill would soon be required. A provisional patent was filed for the resulting device, co-authored by Evin Jaff, Ian Berke, and Caleb Martin (the three main students working on prototyping the device). The prototype was presented at multiple entrepreneurship events, including TigerLaunch and Washington University's Olin School of Business's Idea Bounce, and it was a finalist at both. The student startup disbanded, however, after Ian was awarded his Ph.D. in the Spring of 2019.

University of Washington School of Medicine, Research Intern, Summer 2019

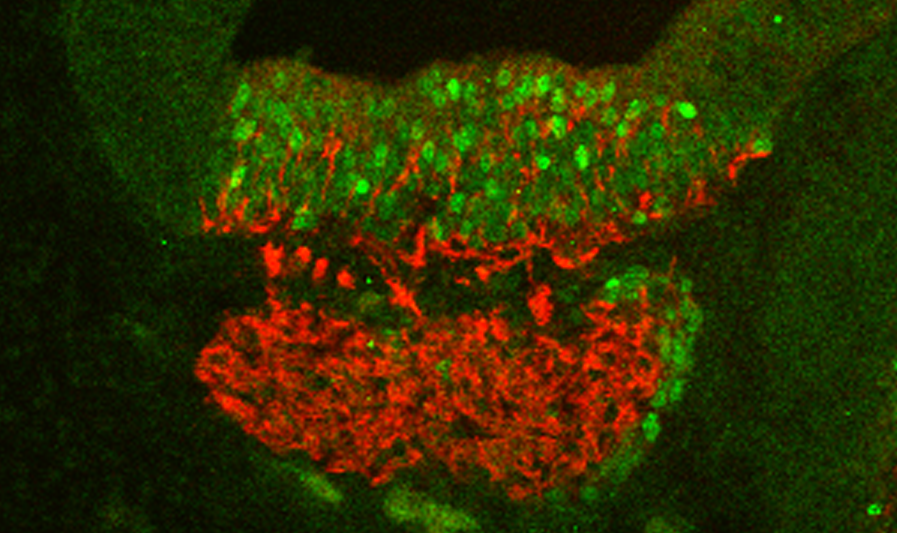

A photo, from a recent publication from the lab, of a sample similar to those I prepared

While interning at the Stone Lab in the Medical School's Otolaryngology Department, I worked under a graduate student to prepare and analyze inner-ear samples. I learned standard lab skills, as well as complex protocols for properly "defrosting" biological samples stored for years in sub-zero freezers and for handling dangerous chemicals such as formaldehyde. While an intern, I also attended a local research conference and met with colleagues of the lab to learn more about medical research.